AI Ethics: When Smart Algorithms Should (and Shouldn’t) Be Used

Artificial intelligence can help in many areas, from legal contracts and business to medical examinations. But is it worth sharing the most intimate, ‘too human’ things with it—feelings, ideas, plans, developments, and innermost desires? Anna Kartasheva, a researcher at the International Laboratory for Applied Network Research at HSE University, discusses this in an interview with IQ Media.

Anna Kartasheva

Researcher at the International Laboratory for Applied Network Research at HSE University, Candidate of Sciences in Philosophy, Director of the Delovaya Kniga Publishing House

Reconciling Human and Algorithmic Capabilities

What is ethics in artificial intelligence?

Benchmarks are an important concept in the ethics of intelligent systems. A benchmark is a reference standard against which a system is compared in order to judge how well it meets specified characteristics.

The ethics of intelligent systems is about how to measure a system’s alignment with human needs, not about who is good and who is bad. In English-language research there is a field called ‘AI alignment.’ What does it mean to ‘align a system’? Humans have interests and needs, and a system has guiding rules. Our interests and the system's must coincide: the system should obey us and have no interests that humanity does not share.

Long-established ethical diagnostic sets are not suitable: there is too much uncertainty in the criteria for evaluating AI performance. Assessing ethics is only a small part of benchmarking, however. One can measure almost anything, such as how a large language model reasons or how it solves mathematical problems. For instance, many models work better with text than with mathematics: they have problems with logic or with reading diagrams.
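The idea of comparing a system against a benchmark can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `score_by_category`, `TOY_BENCHMARK`, and `toy_system` are hypothetical names invented here, the "system" is a lookup table rather than a real model, and exact string matching is a deliberately crude evaluation criterion.

```python
from collections import defaultdict
from typing import Callable, Dict, Sequence, Tuple

# One benchmark item: (category, prompt, expected answer).
Item = Tuple[str, str, str]


def score_by_category(system: Callable[[str], str],
                      items: Sequence[Item]) -> Dict[str, float]:
    """Return, per category, the fraction of items answered as expected."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for category, prompt, expected in items:
        totals[category] += 1
        # Exact match after trimming and lower-casing: a crude criterion.
        if system(prompt).strip().lower() == expected.strip().lower():
            correct[category] += 1
    return {category: correct[category] / totals[category] for category in totals}


# Hypothetical benchmark and a lookup table standing in for a real model.
TOY_BENCHMARK = [
    ("text", "Opposite of 'hot'?", "cold"),
    ("text", "Plural of 'mouse'?", "mice"),
    ("maths", "2 + 2", "4"),
    ("maths", "17 * 3", "51"),
]
TOY_ANSWERS = {
    "Opposite of 'hot'?": "Cold",
    "Plural of 'mouse'?": "mice",
    "2 + 2": "4",
    "17 * 3": "41",  # deliberate arithmetic slip
}


def toy_system(prompt: str) -> str:
    """Stand-in for a model: look the prompt up, or return an empty answer."""
    return TOY_ANSWERS.get(prompt, "")


scores = score_by_category(toy_system, TOY_BENCHMARK)
print(scores)
```

In this toy setup the stand-in system scores perfectly on the text items and gets half of the maths items right. An ethics benchmark would need a far richer criterion than exact matching, which is where the uncertainty about evaluation criteria comes in.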

Ethics vs Functionality

AI is often used in jobs that involve independent thinking.

Each algorithm has a specific architecture that imposes a set of constraints. Every answer from a language model is based on the data it has already seen: the model gives the most likely answer to a given question. This is why its answers are often so impersonal.

Another aspect is the lack of a reverse connection. If you ask a language model who the mother of actor Tom Cruise is, it will say Mary Lee Pfeiffer. But if you ask it who Mary Lee Pfeiffer's son is, it may well fail to answer. Why? The model lacks the reverse link and does not have enough information to make the inference. There is a lot of information about Tom Cruise, so the inference is easy; there is far less about his mother. To us, these two ‘phenomena’ are obviously related, but not to a language model. For the model, the more information there is, the more likely the inference. So some tasks are well suited to AI, while others should not be solved this way.

For example, it is better to write an essay without using a model because that is the only way one can come up with ideas that have not existed before. AI cannot generate them. Its generation of ‘new’ ideas is based on a combination of old ones.

AI can brainstorm with a human and provide interesting conclusions, but it will not come up with anything radically new, nor can it reliably make logical connections. The same issues arise as with Mary Lee Pfeiffer or with solving mathematical problems.
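The Mary Lee Pfeiffer example can be made concrete with a deliberately crude toy. The sketch below is not how a real language model works (real models are neural networks, not lookup tables), and `ToyCompletionModel` and its training sentences are hypothetical. It only illustrates two points from the interview: the answer is the most frequent continuation seen so far, and a fact stored in one direction is not automatically available in the other.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


class ToyCompletionModel:
    """Counts which completion followed each prompt and returns the commonest."""

    def __init__(self) -> None:
        self._counts: Dict[str, Counter] = defaultdict(Counter)

    def train(self, prompt: str, completion: str) -> None:
        # Facts are stored in one direction only: prompt -> completion.
        self._counts[prompt][completion] += 1

    def answer(self, prompt: str) -> Optional[str]:
        seen = self._counts.get(prompt)
        if not seen:
            return None  # nothing was ever observed after this prompt
        # "Most likely answer" = the most frequently seen completion.
        return seen.most_common(1)[0][0]


model = ToyCompletionModel()
# Plenty of (hypothetical) text mentions the actor and, in passing, his mother.
for _ in range(3):
    model.train("Tom Cruise's mother is", "Mary Lee Pfeiffer")
model.train("Tom Cruise's mother is", "not widely known")

forward = model.answer("Tom Cruise's mother is")
reverse = model.answer("Mary Lee Pfeiffer's son is")
print(forward, reverse)
```

The forward question returns the most frequent completion, while the reversed question returns nothing, because no text in that direction was ever seen. A human reader links the two facts immediately; this toy model, like the language model in the example, does not.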

However, there are many tasks where large language models should definitely be used: in analysis, when we are dealing with large amounts of data; in content analysis, when we need to find patterns in large amounts of material.

There is the ‘copilot’ concept, where an AI can brainstorm with us, help us with market research, or come up with a list of topics for social media posts, say, on psychology. These topics will be very popular with readers (after all, the model is based on data about what the audience is most interested in), but there will not be anything completely new in them. These limitations must be kept in mind. Of course, ethics is involved here too (do not use what is not yours!), and this is by no means secondary. But when we talk about education, what’s more important is to show how and where AI can be used effectively.

Algorithm as Interlocutor and Teacher

So, the key is to use AI in a targeted manner?

Yes. But there is another important thing. Essentially, the copilot concept is about the possibility of self-reflection. When we interact with a language model, we are communicating with ourselves one way or another: it is like looking in a mirror and seeing ourselves. But if we dislike the reflection, maybe we should work on the original and focus on developing our own skills?

Neurogymnastics, creative imagination, and memory development techniques are popular now because we sometimes lack these things and would like to develop them. The ability to reflect using AI is extremely valuable. It allows one to test their hypotheses and ideas and strengthen their arguments. It would be great to teach this to students.

Let's say I have a hypothesis and it needs to be tested. Friends are not always willing to do this. Teachers often do not have the time for it either, because it requires a deep, reflective dialogue with each student. Education is a process of saying to a student, ‘Do you think that is so? What if it isn't?’ You give prompts that gradually lead them to realise this or that. AI assistants that can engage in meaningful dialogue with students (for example, monitor how well material has been learnt) and adapt to each person with their individual learning pace could be a quantum leap in education.

Trust AI with Your Schedule

We often place almost unquestioning trust in a wide range of apps for fitness, nutrition, healthy sleep, and daily routines. How ethical is that?

It’s a good question. Let's not forget that all the AI assistants available today are commercial products whose purpose is to sell something. They are designed to create a sense of scarcity. Let's say you are driving and you see your route and destination on the map, along with a popular coffee shop nearby that you could stop at. It might seem that the cafe's presence on the map would not influence your choice, yet the venue's profits go up. In other words, there are always companies with their own interests behind artificial intelligence technologies.

Here is another point: what if the data and documents we upload to a chatbot while communicating with it are confidential? What if we are not supposed to upload them, and we do so without thinking about where they are going? Everyone should be aware that in this case, there is a possibility of data leakage. This raises some ethical issues that concern all interested people, communities, and AI actors. There are specific documents outlining the importance of ethical behaviour by actors: developers, users, organisations, and so on. The AI Alliance has developed a relevant Ethics Code.

Is information hygiene needed in work with AI?

Yes, caution and reasonableness are needed. It is one thing to draw up a travel itinerary, daily schedule, or daily menu (although there are subtle aspects to those as well), and quite another to do something that involves data, especially if you have signed an NDA [non-disclosure agreement]. Being ethical means being aware of these issues, first and foremost.

Shifting the Weight of Decisions

There is also an ethical dimension to the idea of passing responsibility to an intelligent algorithm.

Yes, when we hand over the steering wheel of our lives to a system that decides for us. However, it is often necessary. For example, there are systems in cars that decide to stop before the driver realises it is necessary—for safety reasons. And in that case, the person has to rely on the system.

On the other hand, consider the story of the Boeing 737 MAX 8, which had a design flaw. Two fatal crashes occurred before the design problems were acknowledged (incorrect assumptions about how the automated flight-control system, MCAS, would behave in certain situations and how the pilots would react). It is a huge responsibility for the engineers, just as AI is for developers.

It is worth noting that humans tend to shift responsibility to others. It may be worth designing AI architectures that do not allow too much responsibility to be offloaded onto the system.

Giving Emotions to Smart Technologies

Sometimes algorithms rule our emotions too.

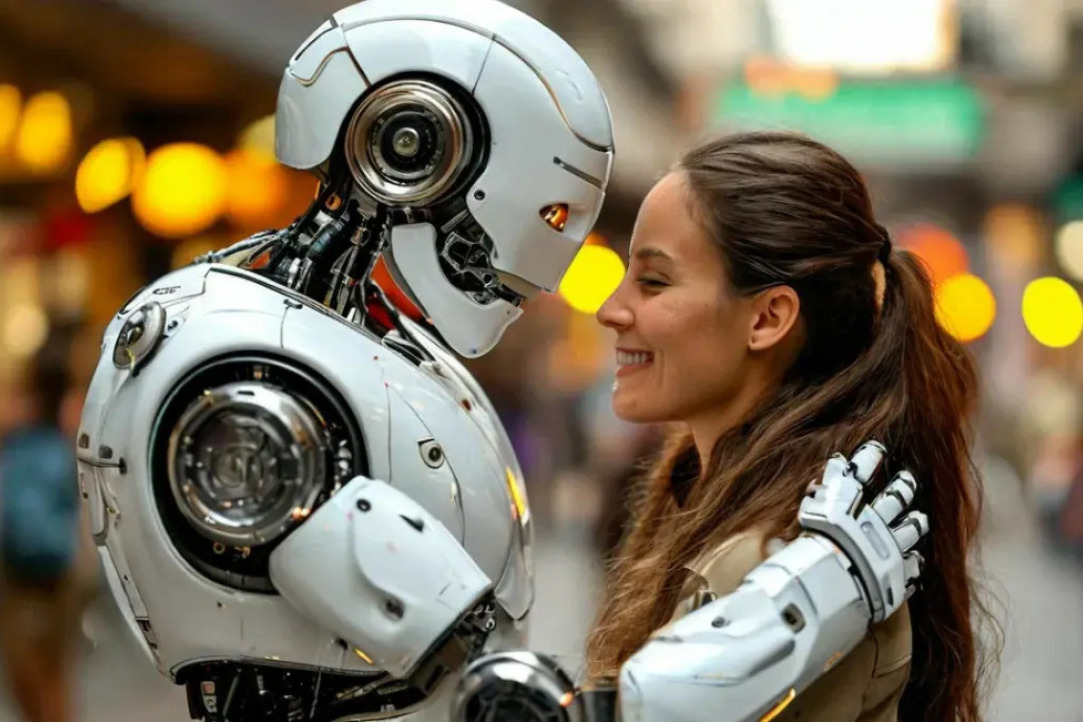

A prime example is affective computing: reading and modelling emotions. This includes social robots that interact with people. Affectiva, a company co-founded by Rosalind Picard of the Massachusetts Institute of Technology (USA), has developed, among other things, a children's toy: an ‘affective tiger’, a robot that reads and responds to a child's emotions. There is a rich story here: emotions can be provoked. For example, a child may be sad, and interacting with the toy will cheer them up. This is also relevant for the elderly (in Japan, this approach is actively used). But there are many nuances. Is it ethical to entrust human emotions to AI? Research suggests that humanoid robots should not be used in kindergartens: something similar to imprinting occurs, and children begin to trust robots without thinking. Robots may be able to take care of the elderly, but it is highly questionable whether they can take care of babies. Also, the ‘uncanny valley’ effect [rejection of anthropomorphic robots] is common in the perception of robots.

In the field of human–computer interaction (HCI), there are theoretical movements that oppose affective computing, arguing for the modelling of emotional experience rather than emotion and for the creation of products that allow people to engage in emotional experience responsibly.

Let's take two examples. Affectiva has a wristband showing a person's emotional state—an affective computing approach. This is where the dictates of technology come in. You feel normal but the wristband tells you, ‘You are sad.’ And you think, ‘I must be sad.’

The second example takes the affective (emotional) experience approach: a smartphone app that lets users send texts and chat in messengers in a way that reflects how they feel. You pick up your phone and, if your hand is shaking, your messages are sent with a red background. When both you and your friends play this game, they immediately see your emotional state (you have demonstrated it). It is an emotional experience: we consciously share our state through gadgets, and the human is not excluded. There are no dictates from technology. Rather, we have to model the emotional experience and think about how to do it.

There is also a question of how AI assistants should be designed so that they do not ‘coach’ people. The dialogue with intelligent algorithms must not go too far or veer into the territory of ‘How should I live my life?’

On the other hand, we cannot dictate to people either. They ask how they should live their lives, and we would be telling them that they will not get an answer to that question.

Vulnerability Algorithms

On the one hand, smart technologies make our lives easier. On the other hand, we often trust them with our innermost secrets. They know too much about us.

Consider the story of a large supermarket that sent an advertisement for nappies to the father of a teenage girl. The father went to find out why. The company replied that, according to their information, his daughter was pregnant. This turned out to be true. Her situation was clear from the range of products she was buying.

Another story: a company decided to give presents to its best customers (to maintain their loyalty) and gave them something they had dreamed of but had not mentioned to even their loved ones. The campaign’s effect was the opposite of what was expected: users felt that their thoughts had been read and were unpleasantly surprised. It turned out the company knew information that people would prefer to hide.

Our smartphones ‘listen’ to us and serve up relevant adverts.

A lot depends on the systems’ design, the tools, and what is allowed and what is not. Incidentally, in Russia, there is a rule that all companies using recommendation systems are obliged to post a document on their websites explaining the reasons for the recommendations.

First Aid through AI

There are psychological chatbots, such as self-help chatbots. How ethical is that?

It would be logical to say that it is not very ethical, but I think it is very humane in terms of the availability of these resources, among other things. A lot of people do not go to psychologists because they are shy or do not know how to talk about their problems. But they can talk to a chatbot. And if, for example, a psychologist is there ‘on the other side’ and hears that a person has said something dangerous, they can draw attention to it and suggest: ‘Look, there are specialists, they have these available time slots, call them.’ They can refer the user to a specialist and alleviate crises if they arise. Such resources are good as an indicator of problems and for immediate self-help. For example, they can recommend some exercises.

It is important to remember, however, that these are general recommendations, not targeted ones.

The architecture of these systems does not allow for anything else. And there should probably be a notification bar that says: ‘And now please reach out to the following services.’ It is the developers’ responsibility to add it.

Unforgivable AI Mistakes

Do we expect personalisation from these systems?

Yes. Let me tell you a story. A colleague from Ural Federal University (UrFU) and I published an article about the dilemma of recommendation systems’ predictive accuracy. The university had developed a system for predicting the future profession of university applicants. The system was often very accurate, but it was also often wrong. The paradox is that neither the applicants nor the management liked it. Applicants trusted their grandmother more than any system. The system was seen as an ‘oracle,’ but not believed. However, we must recognise that the architecture of such systems often includes the possibility of error. We can forgive a human being for a mistake, but we do not forgive a system. This is demonstrated, for example, by recordings of calls to technical support when people's internet goes down.

But it is also our mistake that we are willing to hand over all responsibility to AI.

Yes, it is not uncommon for us to be willing to rely on it completely, because if something goes wrong, we have someone to blame, and that is very convenient.

Humans do not want to have to make decisions all the time.

Yes. Daniel Kahneman distinguishes between ‘system 1’ and ‘system 2’. The first is fast and automatic: it is easier to switch on and handles decisions like ‘which way to go home’ mechanically. But some decisions cannot be made automatically and need the slower, deliberate second system: which university to apply to, where to work. People want to avoid making these decisions because they are very difficult.

And this is where a magical smart algorithm shows up and decides something for us?

But there is another extreme: withholding trust where it is warranted. One has to steer a course between Scylla and Charybdis, between trust and mistrust. Cultural aspects should also be taken into account.

Is AI culturally specific?

Yes. For example, ChatGPT has specific political preferences. China has its own AI that adheres to certain cultural values. ChatGPT or Midjourney would have a hard time drawing the flower with seven colours [a Soviet children's tale—transl.] and do not know, for example, the poet Nekrasov—simply because they have not encountered the data. This should be taken into account. Every language model is a reflection of our society, of certain social groups. The same is true for YandexGPT and GigaChat.

Ethical Code: Presumption of Guilt

Let's discuss the ethical rules declared by the creators of smart algorithms.

Some documents set out rules and even ethics as developers see them. Major developers take their responsibilities seriously. As mentioned earlier, several companies have signed the AI Ethics Code and are constantly updating it. Universities also develop their own codes of ethics.

I would like to mention the well-known Collingridge Dilemma (from engineering ethics, of which AI ethics is a part), which combines an information problem and a power problem. While a technology is still young, we cannot tell what changes need to be made, because its effects are not yet understood. Once the technology is mature and widespread, we understand its effects but can no longer easily change it. The solution to this dilemma may be the precautionary principle: developers must prove that their products will not cause harm. Developers have a huge responsibility, and the risks are also huge.

But their products do touch on the innermost part of a human being!

The cold medical light is switched on, the innermost part is taken apart, dissected, and then put back together again, and it even works. And then the question arises: What is it that we call ‘human’ after all?

Read the interview in Russian on IQ Media